DEEP LEARNED FEATURE TECHNIQUE FOR HUMAN ACTION RECOGNITION IN THE MILITARY USING NEURAL NETWORK CLASSIFIER

Abstract

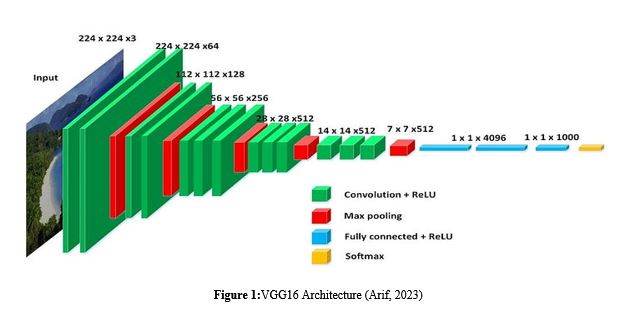

Assessing military trainees in an obstacle-crossing competition requires an instructor to accompany participants or to be strategically positioned along the course. These assessments can suffer from instructor fatigue or bias. There is therefore a need for a system that can recognize the various human actions involved in obstacle crossing and give a fair assessment of the whole process. In this paper, VGG16 model features with a neural network classifier are used to recognize human actions in military obstacle-crossing competition video sequences involving multiple participants performing different activities. The dataset was captured locally during military trainees' obstacle-crossing exercises at a military training institution. Images were segmented into background and foreground using a GrabCut-based segmentation algorithm. Features were then extracted from the foreground-masked images: the VGG16 model automatically extracted deep learned features at the max-pooling layer, and these features were presented to a neural network classifier for classification into the various classes of human actions. The method achieved 90% recognition accuracy with a training time of 104.91 seconds, a 3.6% performance improvement over a selected state-of-the-art model. The model also achieved a precision of 90.1% and a recall of 90.2%. Although many studies have focused on human action recognition in several application areas, this study introduced a novel model for real-time recognition of fifteen different classes of complex actions involving multiple participants during an obstacle-crossing competition in a military environment, leveraging the strength of deep learning and a neural network classifier. This study will benefit the military by supporting unbiased assessment of trainees' performance during training exercises or competitions.
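The accuracy, precision, and recall figures reported in the abstract are multi-class metrics. As a minimal sketch of how such values are commonly computed from true and predicted labels, the Python below uses macro averaging (the unweighted mean over classes). The abstract does not state which averaging scheme was used, so macro averaging is an assumption here; the function name `macro_metrics` and the three action labels are hypothetical and serve only as illustration.

```python
from collections import Counter


def macro_metrics(y_true, y_pred):
    """Return (accuracy, macro precision, macro recall) for multi-class labels.

    Macro averaging is an assumption; the abstract does not say which
    averaging scheme produced its reported precision and recall.
    """
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")

    labels = sorted(set(y_true) | set(y_pred))
    true_count = Counter(y_true)   # samples that truly belong to each class
    pred_count = Counter(y_pred)   # samples predicted as each class
    true_pos = Counter(t for t, p in zip(y_true, y_pred) if t == p)

    accuracy = sum(true_pos.values()) / len(y_true)
    # Per-class precision = TP / predicted; per-class recall = TP / actual.
    # A class with a zero denominator contributes 0.0 to the mean.
    precision = sum(
        true_pos[c] / pred_count[c] if pred_count[c] else 0.0 for c in labels
    ) / len(labels)
    recall = sum(
        true_pos[c] / true_count[c] if true_count[c] else 0.0 for c in labels
    ) / len(labels)
    return accuracy, precision, recall


# Hypothetical labels for illustration only (not the study's fifteen classes).
y_true = ["crawl", "crawl", "jump", "jump", "climb", "climb"]
y_pred = ["crawl", "jump", "jump", "jump", "climb", "crawl"]

acc, prec, rec = macro_metrics(y_true, y_pred)
print(f"accuracy={acc:.3f} precision={prec:.3f} recall={rec:.3f}")
# prints: accuracy=0.667 precision=0.722 recall=0.667
```

With fifteen action classes, macro averaging gives every class equal weight regardless of how many frames it contributes. Weighting by class frequency is an equally plausible choice, and it can shift the reported values when classes are imbalanced.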



Authors

Copyright (c) 2025 Adeola O Kolawole, Martins E Irhebhude, Philip O Odion

This work is licensed under a Creative Commons Attribution 4.0 International License.
